PERFORMANCE BOOST: REPORTING RELIABILITY UP 12½ PERCENT

I’ve recently been leading a project for a large government organisation to improve the timeliness and reliability of its regular corporate reporting. Their reports involve assimilating a sizeable number of Key Performance Indicators (KPIs) from disparate sources into a monthly report.

While it’s still early days, initial indications are that the project is a success, with improved delivery of data and KPIs and more time available for report review and quality assurance.

Let’s look at how this was achieved.

How did the project unfold?

The first step was to get as clear a fix on the situation as possible. This involved gathering figures on actual data delivery times for the last six months. It also involved thinking through what the actual issues were, of which there were two: data delivery was late and/or inconsistent, and some data items were reported in arrears, affecting the perceived currency of the report. Clearly identifying these two separate – but related – issues ensured each was dealt with.

As I’m fond of quoting: clearly stating a problem goes a long way towards solving it. This is impressed on me anew with every performance improvement project I undertake.

Following this I summarised the key issues, which centred on a subset of four KPIs whose delivery was inconsistent enough to throw report preparation timelines out. The reasons for KPIs being reported in arrears were unpacked and compared with practice in comparable jurisdictions, and an evaluation was made of whether it was feasible to bring these KPIs out of arrears.

I then scoped a number of options to improve KPI collection and reporting, gathering input and ideas from the team who collect the KPIs and prepare the monthly reports. For example, one idea put forward was to automate the follow-up for late-provided KPIs. Finally, I evaluated the options and scoped next steps.

The whole effort was written up on one double-sided sheet of A3 paper, drawing on a series of supporting spreadsheets.

What tools were used?

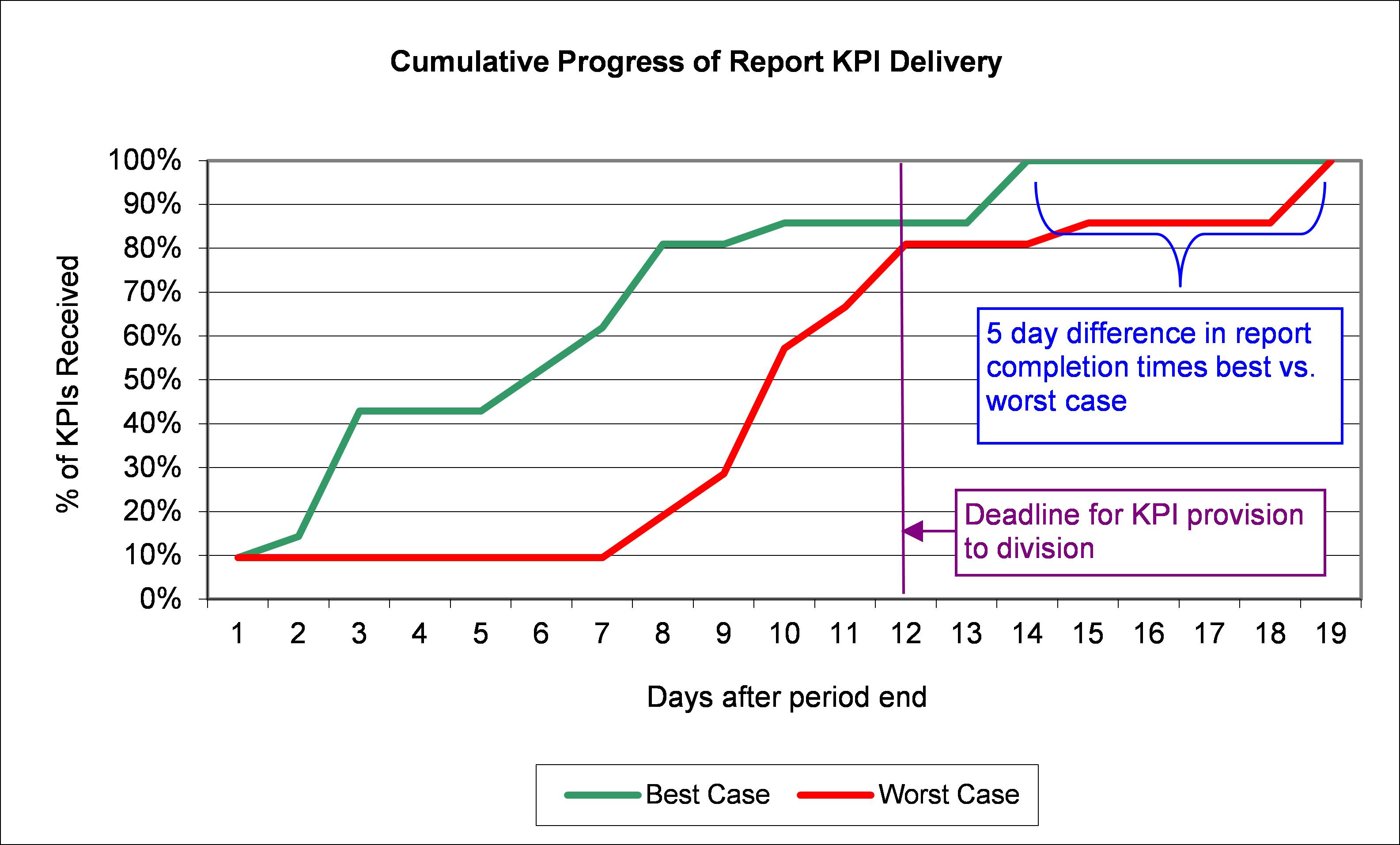

I used various forms of data analysis to define and clarify the problem, including an adapted form of Pareto analysis and an analysis of variation in the delivery of KPIs. An example of one of the graphs from the improvement effort is shown above (organisational details have been removed).
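For readers who want to try this kind of analysis themselves, here is a minimal sketch in Python of a Pareto-style ranking combined with a simple measure of variation. It is not the adapted method used on the project: the KPI names, the figures, and the helper functions `pareto` and `variation` are all hypothetical, made up purely to illustrate the idea.

```python
from statistics import mean, pstdev

# Hypothetical data (NOT from the project): days late per month for each KPI
# over six months, where 0 means the KPI arrived on time.
days_late = {
    "KPI A": [0, 3, 0, 5, 2, 0],
    "KPI B": [0, 0, 0, 0, 1, 0],
    "KPI C": [4, 0, 6, 0, 0, 3],
    "KPI D": [0, 0, 0, 0, 0, 0],
    "KPI E": [1, 0, 0, 0, 0, 0],
}


def pareto(totals):
    """Rank items from largest to smallest and add the cumulative share."""
    grand_total = sum(totals.values())
    rows, running = [], 0
    for name, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
        running += total
        share = running / grand_total if grand_total else 0.0
        rows.append((name, total, share))
    return rows


def variation(series):
    """Mean and (population) standard deviation of lateness for each KPI."""
    return {name: (mean(values), pstdev(values)) for name, values in series.items()}


total_late = {name: sum(values) for name, values in days_late.items()}
ranking = pareto(total_late)
spread = variation(days_late)

for name, total, cumulative in ranking:
    avg, sd = spread[name]
    print(f"{name}: {total} days late in total, cumulative share {cumulative:.0%}, "
          f"mean {avg:.1f}, std dev {sd:.1f}")
```

In this made-up data set, two of the five KPIs account for most of the total lateness, which is the sort of pattern a Pareto analysis is designed to surface. The standard deviation gives a rough indication of how inconsistent each KPI's delivery is from month to month.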

An informal form of benchmarking was used to help size up the issues relating to reporting in arrears.

What benefits were possible?

The analysis focused on the four KPIs whose inconsistent delivery jeopardised completion of the report as a whole. It found that delivering these on time every month – which was considered achievable – equated to an increase in reliability of 12½ percent. It also concluded that the best observed performance could be replicated every month, so that all KPIs would be received two days earlier than the average. That creates extra time for review and thereby enhances the overall quality of the report. Early indications are that these benefits are on the way to being realised.

What made the improvement project work?

The project produced these results because it combined two factors: fact-gathering and quantitative analysis on the one hand, and a collaborative, participatory approach to generating improvement ideas on the other. The latter engaged key stakeholders at the coalface, who were the ones to implement the recommendations and carry them forward. This combination is powerful: it allowed solutions to be generated and deployed quickly, and made them durable enough to stay in place (because the implementers were engaged).

Another factor contributing to the project’s success was the presence of an independent analyst and facilitator (me) who brought objectivity and a fresh pair of eyes to the exercise, and guided the process along a structured path that held off from jumping straight to solutions before the issues were sufficiently scoped.

Finally, the concise and punchy nature of the A3 report highlighted the findings in quantitative terms and created a clear pathway to the solutions. This engaged and reassured time-poor senior executives who wanted clear quick wins supported by analysis and evidence-based decisions.

If you’re interested in achieving quick performance wins backed by evidence-based decision making, please contact me by email at info@mcarmanconsulting.com or by phone on 0414 383 374.

A rapid improvement project such as the one above can be implemented at relatively short notice, completed within 1 to 2 months, and produce tangible results that positively influence Boards, Ministers, Chief Executives and regulators (among others). These projects can be conducted for customer-facing services, compliance activities, corporate support functions, or back-office processes, to name a few. Isn’t there an area of your organisation that could benefit from a quick performance boost?

* * *

Regards, Michael Carman

Director | Michael Carman Consulting

PO Box 686, Petersham NSW 2049 | M: 0414 383 374 | W: www.mcarmanconsulting.com

P.S. It’s time to start organising strategic planning workshops: please contact me by email at info@mcarmanconsulting.com or by phone on 0414 383 374 if you would like assistance with your organisation’s planning effort, or would like me to facilitate your planning workshop.

© Michael Carman 2010-2012