HOW DATA SMART IS YOUR ORGANISATION?

Recently I was working with a medium-sized business, applying analytics to improve its top-line revenue. I had enthusiastic agreement from the MD, who was supportive and committed.

There was only one problem: we couldn’t extract the data. Individual customer histories could be extracted, but not a full report of all customers, which is what we needed for the analysis.

Everyone knows the old saying with analysis that if you put garbage in, you get garbage out. But we didn’t have anything (not even garbage) to put into the analysis. A minor detail!

I’m struck by the yawning chasm between the lightning-fast capability of modern everyday computers and software on the one hand, and the precarious nature of the management processes needed to support an analytics effort on the other: collecting data, entering it, cleansing it, massaging it for analysis, and then actually analysing it. Never mind interpreting the results, applying them, and changing business practices accordingly.

Just for kicks, not long ago I ran a regression with 10,000 data points and eight variables on a two-year-old, low-end Toshiba laptop running Windows 7 with a 32-bit processor, using Microsoft Excel. I clocked the computer crunching the task at 2.25 seconds. It took me longer to fabricate the random data than it took an inexpensive computer to analyse it.
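For readers who want to try something similar, here is a minimal sketch of a regression of the same size (10,000 random data points, eight variables) in plain Python. It is not the Excel run described above: the function name `fit_ols`, the made-up coefficients and the noise level are all assumptions chosen purely for illustration, and the timing will depend on your machine.

```python
import random
import time


def fit_ols(rows, targets):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    Returns the intercept followed by one slope per variable.
    """
    k = len(rows[0]) + 1  # number of coefficients, +1 for the intercept
    xtx = [[0.0] * k for _ in range(k)]
    xty = [0.0] * k
    for row, y in zip(rows, targets):
        x = [1.0] + list(row)  # leading 1.0 is the intercept column
        for i in range(k):
            xty[i] += x[i] * y
            for j in range(k):
                xtx[i][j] += x[i] * x[j]

    # Solve the k-by-k system by Gauss-Jordan elimination with partial pivoting.
    aug = [xtx[i] + [xty[i]] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for c in range(col, k + 1):
                    aug[r][c] -= factor * aug[col][c]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Fabricated random data: an intercept plus eight slopes (illustrative values only).
random.seed(0)
true_coefs = [2.0, 0.5, -1.0, 3.0, 0.0, 1.5, -2.5, 0.25, 4.0]
rows = [[random.gauss(0, 1) for _ in range(8)] for _ in range(10_000)]
targets = [
    true_coefs[0]
    + sum(b * x for b, x in zip(true_coefs[1:], row))
    + random.gauss(0, 0.1)  # small random noise
    for row in rows
]

start = time.perf_counter()
coefs = fit_ols(rows, targets)
elapsed = time.perf_counter() - start

print(f"Fitted {len(coefs)} coefficients in {elapsed:.2f} seconds")
print([round(c, 2) for c in coefs])
```

The point is the same as with the Excel run: once the data exists in a usable form, the computation itself is the quick and easy part.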

Compare that to the weeks, often months, of effort required for an organisation to get itself together collating, analysing, interpreting and applying data. It’s a journey worthy of Frodo Baggins on a bad day …

This is not a criticism, just an observation on the management reality needed to make analytics efforts work. But it serves to open up the question: How analytically sophisticated is your organisation?

The Four Types of Analytical Sophistication

I’ve been thinking about this recently, since this issue seems to loom so large for so many organisations. The answer to the question ‘How able is your organisation to capture, manage, interpret and use information?’ takes us back to the fuzzy realm of culture, leadership, the amount of organisational ‘looseness’ or ‘tightness’ and so forth. The success of the technical analytics rests on decidedly non-technical factors such as whether management and staff are committed to collecting data, whether siloing prevents different divisions from sharing data and cooperating, whether there is accountability and follow-up, and whether or not the analysis is used to improve performance.

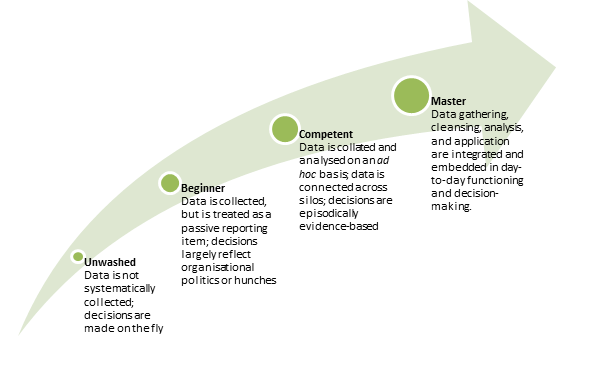

I distinguish four levels of analytical sophistication:

Unwashed

Beginner

Competent

Master.

These are shown in the graphic below.

© Michael Carman 2015

Most organisations are Beginners. Some edge their way into Competent, and only a very few fall into the Master category: Amazon, Intermountain Healthcare, Capital One and Caesars Entertainment would be classified as Masters.

It’s possible for an organisation to work its way up through the categories; however, a Master organisation could also have had evidence-based decision-making at its core from the outset.

Analytical Mastery Close-Up

Let me give you a taste of what’s entailed in being in the Master category.

For one thing, someone takes on the hack work (that’s what it is) entailed in collating and cleansing data. I wrote a couple of months back about leading analysis-based health provider Intermountain Healthcare. One key player in Intermountain’s quality-improvement area observed that there needs to be a constant focus on data gathering, which was described (accurately, in my experience) as “painstakingly mundane work that almost no one takes to naturally.” This is the cost of having hard, evidence-based decisions: the effort in collating hard evidence.

There must be a clearly defined business goal. Higher conversion rates, enhanced cross sales, lower unit costs, lower lost-time injury rate, reduced customer attrition, lower morbidity … you get the idea. These goals should be crisp and specific – don’t get too high-brow. Analytics is always in the service of higher performance: trying to apply it in the absence of a clearly formulated goal will lead to results that are academic and of passing interest, at best. At worst you’ll be drowning in analysis which takes you nowhere, distracting people in the service of a ‘data bureaucracy’.

Masters tackle problems using a rigorous empirical approach, often using business experiments (‘test and learn’) to make decisions. For example, in one year Capital One ran 28,000 experiments (this is not a typo: the number is twenty-eight thousand) on new products, new advertising approaches, new markets and new business models, enabling it to make the right offer at the right time to the right customer.

Coupled with that is real discipline in the conduct of experiments. Caesars Entertainment CEO Gary Loveman – who is something of a guru in business analytics – once quipped that there are three ways to get fired from Caesars: theft, sexual harassment, and running an experiment without a control group.
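To show why the control group matters, here is a minimal sketch of how a test-and-learn result might be compared against a control group, using a standard two-proportion z-test. The function name `compare_to_control` and the customer numbers are hypothetical, made up for illustration; this is not a description of how Caesars or Capital One actually run their experiments.

```python
import math


def compare_to_control(conv_control, n_control, conv_test, n_test):
    """Two-proportion z-test: did the test group convert differently from control?

    Returns (lift, z, p_value), where lift is the difference in conversion rates
    and p_value is two-sided.
    """
    rate_control = conv_control / n_control
    rate_test = conv_test / n_test
    # Pooled rate under the assumption that the offer made no difference.
    pooled = (conv_control + conv_test) / (n_control + n_test)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    z = (rate_test - rate_control) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return rate_test - rate_control, z, p_value


# Hypothetical numbers: 5,000 customers see the usual offer (control),
# 5,000 see the new offer (test).
lift, z, p_value = compare_to_control(
    conv_control=200, n_control=5000, conv_test=260, n_test=5000
)
print(f"Lift: {lift:.3%}, z = {z:.2f}, p = {p_value:.4f}")
```

Without the control group there is no baseline rate to compare against, so there is no way to tell whether the new offer or something else (seasonality, a competitor’s stumble) produced the result.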

This rigorous approach is embedded in the organisation’s processes rather than being an add-on that occurs on an episodic, project basis. Results are fed back to operations, so the analysis actually influences performance.

* * *

It goes without saying that Mastery is something to aim for – the organisations that play in this space have produced spectacular results. But if that seems too much of a stretch, I recommend systematically doing what you can to edge your way to the Competent level. And if you’d like some assistance getting there, please contact me.

Regards,

Michael

Director | Michael Carman Consulting

PO Box 686, Petersham NSW 2049 | M: 0414 383 374

© Michael Carman 2015